Developing the matchmaker system for Myridian: The Last Stand - Part 1

One of the tricky points in the development of our game was the matchmaker. For those who don’t know, this is the system that puts users in rooms, based on characters, skill and other information.

There are several matchmaker systems on the market, but they are very simple: they just receive information and organize players based on skill level, number of wins, etc. We wanted a system that would coordinate the entire flow of information: prepare the rooms, allocate the server and receive the match information. In games like League of Legends or Fortnite, the matchmaker usually just connects players to the game server, which in turn interacts with players to create teams and configure characters, among other things.

Our plan was for the game server to be as simple as possible, so all the logic described above was implemented in the matchmaker. In addition, it is much simpler and cheaper to hire people to work with cloud providers and develop in TypeScript than to work with Blueprints in Unreal Engine. So that was our first high-level architecture decision.

We then divided the development into two parts, one dealing with the clients’ connection to the system (let’s call it the front-end) and the other processing player requests (let’s call it the back-end).

Front-End

We developed three versions of the front-end. The first was a REST API called by the client, with REST endpoints in API Gateway invoking Lambda functions. This version worked very well, but the client accessed the system via HTTP polling, so there was a delay of a few seconds before information reached the client, and the client kept calling the system for the entire time the player was connected. The API was very simple, but beyond this performance issue, what really drove the evolution was the fact that the matchmaker could not actively communicate with the clients.

In the development of the second version, we used a publish-subscribe system from a company called PubNub, but during the tests we had delay and performance problems, with messages taking too long to reach the clients, so this version was quickly discarded.

That led us to the current version, which uses a WebSocket API, also managed by API Gateway. With it, the clients only send a message requesting a match, and the system actively sends the following messages to the clients:

- Match created by the system

- Team chosen by each player

- Hero chosen by each player

- Confirmation from each player

- Game server connection information

This way, there is no more polling, which reduces the number of calls to the system and the cost, in addition to simplifying the logic on the client side.
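
To make the push model concrete, here is a minimal sketch of how the five server-to-client messages listed above could be typed on the client. The message names, the field names and the `describeMessage` helper are all assumptions made for illustration; they are not the actual protocol of the game.

```typescript
// Hypothetical message shapes: names and fields are illustrative,
// not the game's actual protocol.
type ServerMessage =
  | { type: "matchCreated"; roomId: string; playerIds: string[] }
  | { type: "teamChosen"; playerId: string; team: string }
  | { type: "heroChosen"; playerId: string; hero: string }
  | { type: "playerConfirmed"; playerId: string }
  | { type: "serverReady"; host: string; port: number };

// Turns a pushed message into a status line the client could display.
// Every variant of the union is handled by its own case.
function describeMessage(msg: ServerMessage): string {
  switch (msg.type) {
    case "matchCreated":
      return `Room ${msg.roomId} created with ${msg.playerIds.length} players`;
    case "teamChosen":
      return `${msg.playerId} joined team ${msg.team}`;
    case "heroChosen":
      return `${msg.playerId} picked ${msg.hero}`;
    case "playerConfirmed":
      return `${msg.playerId} is ready`;
    case "serverReady":
      return `Connect to ${msg.host}:${msg.port}`;
  }
}
```

With a shape like this, the client only reacts to whatever arrives on the socket, instead of repeatedly asking the system for the current state.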

Back-End

The back-end logic had two versions. The first was a TypeScript application executing complex queries in DynamoDB, running periodically on a dedicated server, also hosted on AWS. While it worked flawlessly, keeping a whole machine running for such a small application was a waste of money.

We wanted all the logic to run in the Lambda environment, but there was an important technical issue: the minimum time between runs for scheduled tasks in AWS Lambda is 1 minute, which is unacceptable, as users would have to wait well over 1 minute to enter a match, considering all the time needed for player choices, server allocation and client connection.

We had three options to reduce the time between matchmaking runs:

- A Lambda function executing logic multiple times during the execution minute, waiting a few seconds between each execution;

- A Lambda function sending scheduled messages to a Simple Queue Service queue, with the logic performed by the queue handler;

- A DynamoDB Stream handler reacting to items expired by DynamoDB TTL (time-to-live).

We decided to use the second option because of its lower cost (shorter execution time), its simplicity, and also because it is an option that other blogs on this subject had not yet analyzed. We configured the scheduled function to send 6 messages with a delay of 10 seconds in between, so the matchmaking logic would run every 10 seconds.
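
As a rough sketch of this second option, the function below builds the six delayed messages for one scheduled run. The entry fields (`Id`, `MessageBody`, `DelaySeconds`) follow the shape that the SQS `SendMessageBatch` operation accepts; the function name, the message body and the exact delays (0, 10, ..., 50 seconds) are assumptions made for illustration. It also assumes a standard queue, since FIFO queues do not support per-message delays.

```typescript
// One entry in the shape accepted by the SQS SendMessageBatch operation.
interface DelayedEntry {
  Id: string;
  MessageBody: string;
  DelaySeconds: number;
}

// Builds one "tick" message per interval inside the scheduling window.
// With the defaults this yields delays of 0, 10, 20, 30, 40 and 50 seconds,
// so the queue handler fires roughly every 10 seconds until the next run.
function buildTickEntries(intervalSeconds = 10, windowSeconds = 60): DelayedEntry[] {
  if (intervalSeconds <= 0) {
    throw new Error("intervalSeconds must be positive");
  }
  const entries: DelayedEntry[] = [];
  for (let delay = 0; delay < windowSeconds; delay += intervalSeconds) {
    entries.push({
      Id: `tick-${delay}`,
      // Hypothetical payload: the handler only needs to know it is a tick.
      MessageBody: JSON.stringify({ kind: "matchmaking-tick", delay }),
      DelaySeconds: delay,
    });
  }
  return entries;
}
```

In a scheduled Lambda function, the returned entries could be sent in a single `SendMessageBatch` call, since six entries fit within the limit of 10 per batch.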

Another task handles the behavior of the room after its creation. It waits for the players’ choices or for a configured timeout, in which case random choices are forced upon the players who have not chosen. This task runs periodically for each created room, and only lasts from the moment players enter the room until they connect to the match server.
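
The timeout rule can be sketched as a small pure function that the room task might call on each run. Everything here is hypothetical: the `PlayerChoice` shape, the `finalizeRoom` name and the lists of teams and heroes are assumptions for illustration, and the real task also has to read and write room state.

```typescript
interface PlayerChoice {
  team?: string;
  hero?: string;
}

type FinalChoice = Required<PlayerChoice>;

// Picks one element; `random` is injectable so the behavior can be tested.
function pickRandom<T>(items: T[], random: () => number): T {
  return items[Math.floor(random() * items.length)];
}

// Returns undefined while the room should keep waiting, or the final
// choices once every player has chosen or the timeout has elapsed.
function finalizeRoom(
  choices: Record<string, PlayerChoice>,
  elapsedMs: number,
  timeoutMs: number,
  teams: string[],
  heroes: string[],
  random: () => number = Math.random,
): Record<string, FinalChoice> | undefined {
  const players = Object.entries(choices);
  const allChosen = players.every(
    ([, c]) => c.team !== undefined && c.hero !== undefined,
  );
  if (!allChosen && elapsedMs < timeoutMs) {
    return undefined; // Still inside the timeout: check again on the next run.
  }

  const result: Record<string, FinalChoice> = {};
  for (const [playerId, c] of players) {
    result[playerId] = {
      // Keep what the player picked; force a random value for anything missing.
      team: c.team ?? pickRandom(teams, random),
      hero: c.hero ?? pickRandom(heroes, random),
    };
  }
  return result;
}
```

Keeping the rule free of I/O makes it easy to test both the "keep waiting" branch and the forced random choices.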

Call Flow

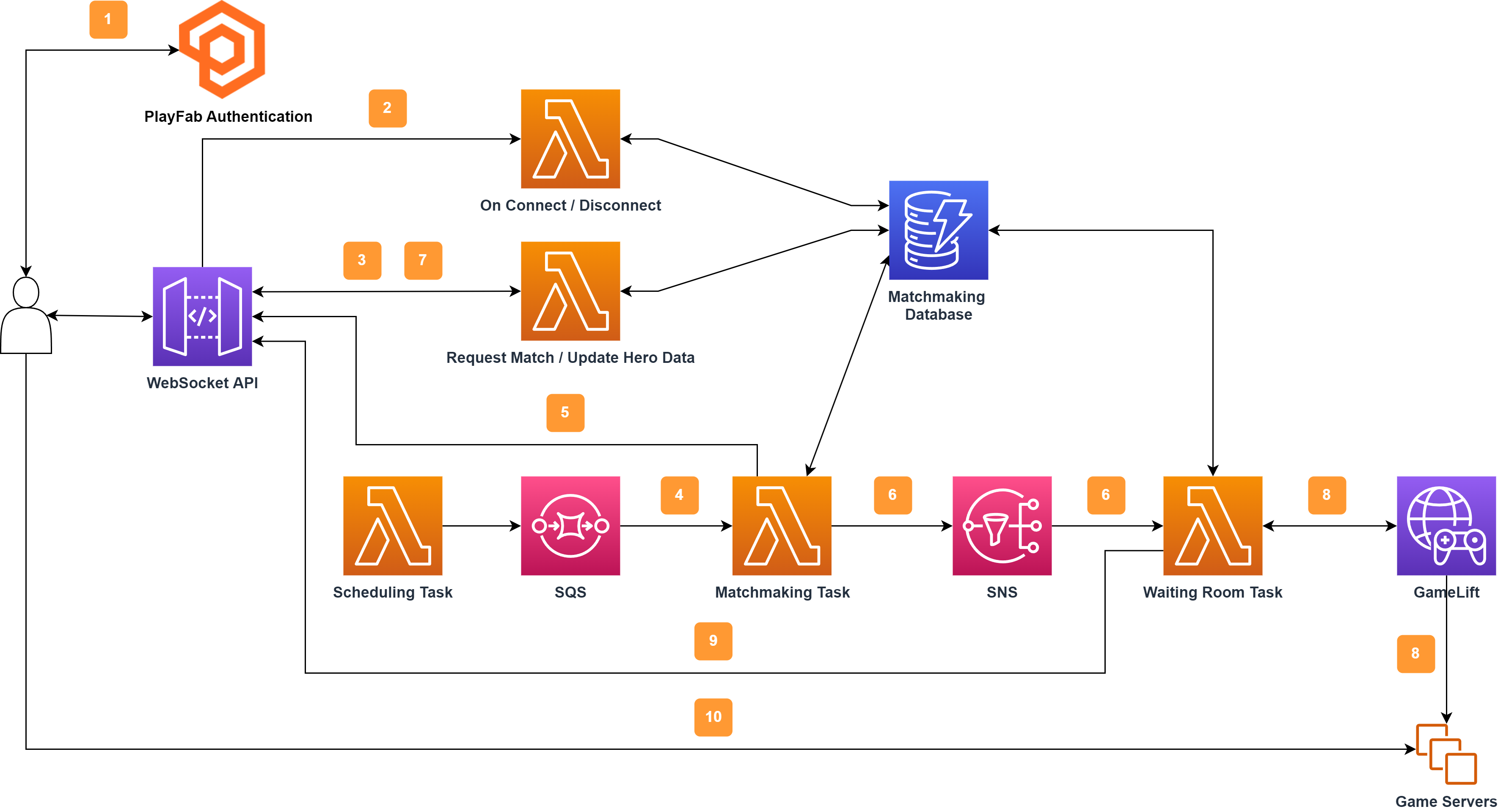

With the system described, the application flow is as follows:

- Client logs into PlayFab and receives a session ticket

- Using the session ticket, the client connects to the WebSocket API, which then verifies the ticket

- Client requests a new match; the system stores the request in the database

- Periodically, the Matchmaking Task queries the pending requests from clients and groups them into rooms

- Matchmaking Task notifies the client that the room has been created

- Matchmaking Task creates the task that monitors the room and handles players’ choices

- Client sends team, character and build choices; the system broadcasts information to the other players

- After receiving all data from the players (or after a certain timeout), the Waiting Room Task asks GameLift to allocate a server, passing the players’ choices to it

- Waiting Room Task sends the game server’s connection information to the Clients

- Clients connect to the server and the match starts
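
The grouping step performed by the Matchmaking Task can be illustrated with a deliberately simplified sketch. The actual grouping criteria are not covered in this article, so the approach below (sort by skill, fill rooms of a fixed size, leave the remainder waiting for the next run) is an assumption, as are the `MatchRequest` fields and the `groupIntoRooms` name.

```typescript
// Hypothetical shape of a stored match request.
interface MatchRequest {
  playerId: string;
  skill: number;
}

interface GroupingResult {
  rooms: MatchRequest[][];
  waiting: MatchRequest[];
}

// Sorts requests by skill so neighbors are close in level, fills as many
// complete rooms as possible and leaves the rest for the next run.
function groupIntoRooms(requests: MatchRequest[], roomSize: number): GroupingResult {
  if (roomSize < 1) {
    throw new Error("roomSize must be at least 1");
  }
  const sorted = [...requests].sort((a, b) => a.skill - b.skill);
  const fullRooms = Math.floor(sorted.length / roomSize);
  const rooms: MatchRequest[][] = [];
  for (let i = 0; i < fullRooms; i++) {
    rooms.push(sorted.slice(i * roomSize, (i + 1) * roomSize));
  }
  return { rooms, waiting: sorted.slice(fullRooms * roomSize) };
}
```

For example, five pending requests with a room size of 2 produce two rooms, and the remaining player stays in the queue until the next matchmaking run.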

In this article, we focus on the high-level architecture and the AWS services used. In the next post we will talk a little bit about code, optimizations of DynamoDB queries and the team selection functionality, which was the last addition to the system. See ya.